What is DA-bench?

DA-bench is the “Data Analyst Benchmark”: a series of questions that a data analyst would be expected to solve.

This benchmark was created to help us test Unsupervised and other data tools against real-world problems, so we can understand the strengths and weaknesses of various approaches to automating analytics.

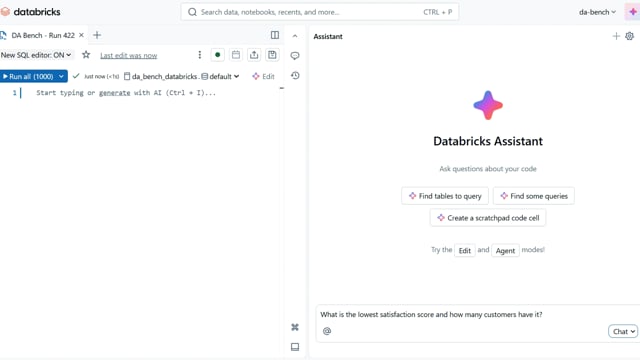

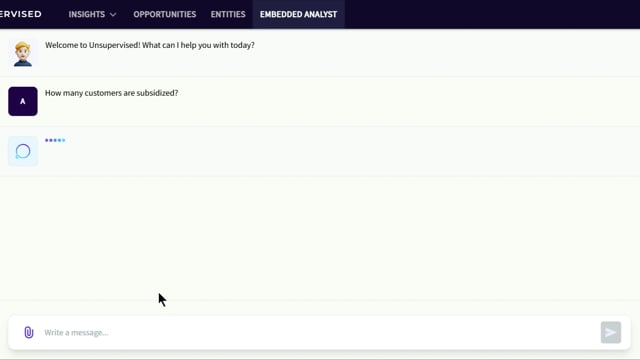

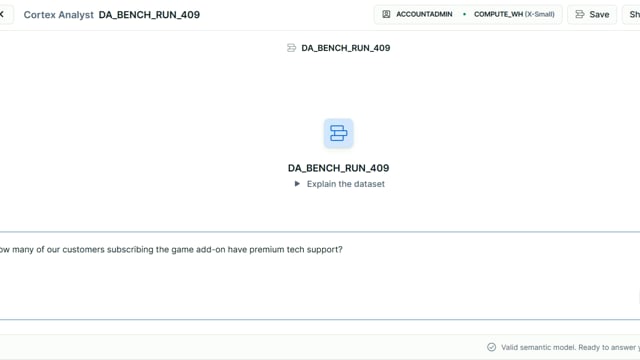

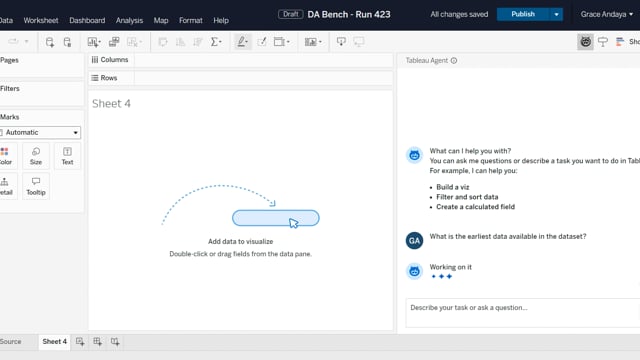

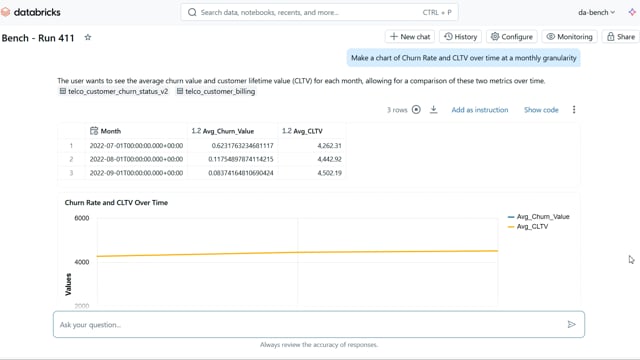

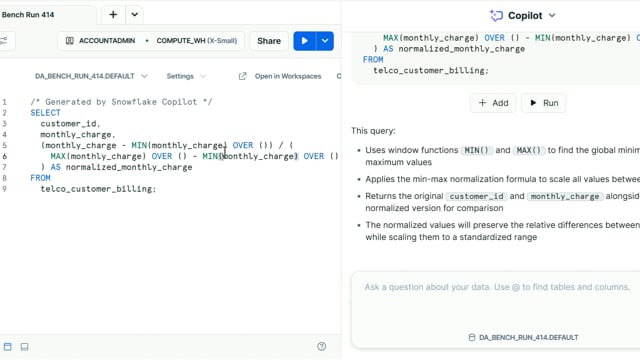

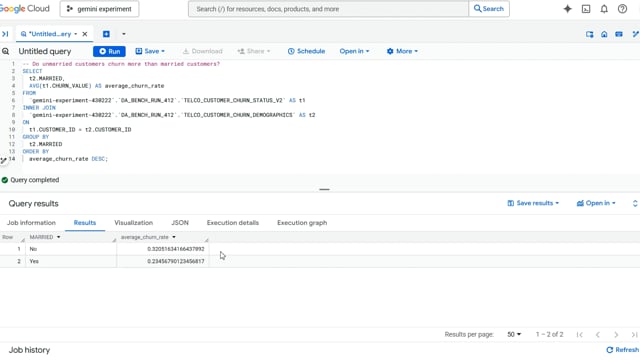

DA-bench is a visual benchmark: you can see both the score and a video of how every tool performs on every test. See some examples below:

Leaderboard

| Tool | Tool Type | Data Source | Date Tested | Hallucination Rate | Scalability Score | Test Score | Overall Score |
|---|---|---|---|---|---|---|---|
| Unsupervised | Full Agent | Uses your data warehouse | Oct 24, 2025 | 15.0% | 100.0% | 53.1% | 71.9% |
| Databricks Assistant | Co-pilot | Uses your data warehouse | Oct 20, 2025 | 20.0% | 100.0% | 52.8% | 71.7% |
| Databricks Genie | Full Agent | Uses your data warehouse | Oct 13, 2025 | 36.6% | 100.0% | 40.6% | 64.4% |
| Google Gemini in BigQuery | Co-pilot | Uses your data warehouse | Oct 15, 2025 | 23.8% | 71.4% | 49.1% | 58.0% |
| Thoughtspot Spotter | Full Agent | Uses your data warehouse | Oct 21, 2025 | 25.0% | 100.0% | 26.9% | 56.1% |
| MicroStrategy Auto Answers | Full Agent | Uses your data warehouse | Apr 8, 2025 | 40.9% | 100.0% | 23.2% | 53.9% |
| Snowflake Cortex Analyst | Full Agent | Uses your data warehouse | Oct 13, 2025 | 26.7% | 71.4% | 33.8% | 48.8% |
| Snowflake Copilot | Co-pilot | Uses your data warehouse | Oct 20, 2025 | 45.0% | 71.4% | 33.4% | 48.6% |
| Tableau Agent | Co-pilot | Uses your data warehouse | Oct 21, 2025 | 78.9% | 100.0% | 5.0% | 43.0% |
| Qlik Sense Insight Advisor | Natural Language Search | Uses your data warehouse | Feb 18, 2025 | 300.0% | 100.0% | -4.0% | 37.6% |
| Amazon Q in QuickSight | Co-pilot | Uses your data warehouse | Jul 14, 2025 | 118.2% | 100.0% | -4.9% | 37.0% |
| IBM Cognos Assistant AI | Natural Language Search | Uses data in memory | Oct 22, 2025 | 200.0% | 85.7% | -3.1% | 32.4% |
| SAP Just Ask | Natural Language Search | Uses your data warehouse | Oct 22, 2025 | #DIV/0!% | 71.4% | -6.3% | 24.8% |
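The leaderboard does not state how the Overall Score is derived. In every row, though, it matches 0.4 × Scalability Score + 0.6 × Test Score to within the rounding of the published figures. The sketch below checks that inferred weighting against the published values; the 40/60 split and the names `overall_score` and `ROWS` are our assumptions, not documented DA-bench definitions.

```python
# Hypothetical check: the 0.4 / 0.6 weights are inferred from the published
# leaderboard, not documented by DA-bench.
SCALABILITY_WEIGHT = 0.4
TEST_WEIGHT = 0.6


def overall_score(scalability: float, test: float) -> float:
    """Weighted blend of Scalability and Test scores, both in percent."""
    return SCALABILITY_WEIGHT * scalability + TEST_WEIGHT * test


# (tool, scalability %, test %, published overall %) copied from the leaderboard.
ROWS = [
    ("Unsupervised", 100.0, 53.1, 71.9),
    ("Databricks Assistant", 100.0, 52.8, 71.7),
    ("Databricks Genie", 100.0, 40.6, 64.4),
    ("Google Gemini in BigQuery", 71.4, 49.1, 58.0),
    ("Thoughtspot Spotter", 100.0, 26.9, 56.1),
    ("MicroStrategy Auto Answers", 100.0, 23.2, 53.9),
    ("Snowflake Cortex Analyst", 71.4, 33.8, 48.8),
    ("Snowflake Copilot", 71.4, 33.4, 48.6),
    ("Tableau Agent", 100.0, 5.0, 43.0),
    ("Qlik Sense Insight Advisor", 100.0, -4.0, 37.6),
    ("Amazon Q in QuickSight", 100.0, -4.9, 37.0),
    ("IBM Cognos Assistant AI", 85.7, -3.1, 32.4),
    ("SAP Just Ask", 71.4, -6.3, 24.8),
]

if __name__ == "__main__":
    for tool, scal, test, published in ROWS:
        computed = overall_score(scal, test)
        # Inputs are rounded to one decimal place, so allow a small tolerance.
        status = "ok" if abs(computed - published) <= 0.1 else "MISMATCH"
        print(f"{tool:<28} computed={computed:5.1f} published={published:5.1f} {status}")
```

If a future leaderboard row reports a MISMATCH, the weighting is probably different from what this sketch assumes.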

About

The Data Analyst Benchmark is a collection of datasets and prompts that can be used to test how automated analytics tools handle common data analyst tasks.

We use this information to help us prioritize work to improve our AI. We are making it available publicly to help other companies improve their tools and to help users evaluate which tools are relevant to their problems.

DA-bench currently tests dozens of prompts across 9 categories. Evaluation is performed manually by a third party; scores and videos of the test results are displayed on dabench.com.

Test Scoring

Each test question is worth a maximum of 5 points. Deductions are made as follows:

| Condition | Deduction |
|---|---|
| User must hunt for the answer in the UI | -1 point |
| Tool requests the table name from the user | -1 point |
| Tool requests the column name from the user | -1 point |
| Tool requests the column value from the user | -1 point |
| User must fix errors | -1 point per error fixed |
| Tool hallucinates an incorrect answer | -5 points |
| Tool says it cannot answer the question because the necessary column or data is not available | -5 points (this is also a hallucination) |

More Info

DA-bench is maintained by Unsupervised.

Suggestions and contributions are welcome on the DA-bench GitHub repository.